When working with machine learning, and especially with deep learning and neural networks, it is preferable to run the processing on a graphics card rather than the CPU. Even a fairly basic GPU will usually outperform a CPU when it comes to training neural networks.

But which GPU should you buy? There is a lot of choice, and it can get confusing and expensive very quickly. I will therefore try to guide you on the relevant factors to consider, so that you can make an informed choice based on your budget and particular modelling requirements.

Why is a GPU preferable over a CPU for Machine Learning? #

A CPU (Central Processing Unit) is the workhorse of your computer, and importantly is very flexible. It can deal with instructions from a wide range of programs and hardware, and it can process them very quickly. To excel in this multitasking environment a CPU has a small number of flexible and fast processing units (also called cores).

A GPU (Graphics Processing Unit) is a little bit more specialised, and not as flexible when it comes to multitasking. It is designed to perform a very large number of mathematical calculations in parallel, which increases throughput. This is achieved by having a much higher number of simpler cores, sometimes thousands, so that many calculations can be processed at once.

Image by Ahmed Gad from Pixabay

This ability to carry out many calculations in parallel is a perfect fit for:

- graphics rendering - moving graphical objects need their trajectories recalculated constantly, which means a large number of repeated mathematical calculations that can run in parallel.

- machine and deep learning - large amounts of matrix/tensor calculations, which a GPU can process in parallel.

- any type of mathematical calculation that can be split to run in parallel.
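To make the last point concrete, here is a minimal sketch in plain Python of why matrix multiplication parallelises so well: every cell of the result is its own dot product and does not depend on any other cell. The function names are made up for illustration, and the thread pool only stands in for the many cores of a GPU; it shows the independence of the work, not a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of one row and one column."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(a, b, workers=4):
    """Multiply two matrices, computing every output cell as a separate task."""
    cols = list(zip(*b))  # columns of b
    # One independent task per output cell: (row of a, column of b).
    tasks = [(row, col) for row in a for col in cols]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda task: dot(*task), tasks))
    n_cols = len(cols)
    # Reassemble the flat list of cells into rows.
    return [flat[i:i + n_cols] for i in range(0, len(flat), n_cols)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_parallel(a, b))  # [[19, 22], [43, 50]]
```

A GPU applies the same idea in hardware, handing each of those small independent calculations to one of its many cores.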

I think the best summary I have seen is on Nvidia's own blog:

| CPU | GPU |
|---|---|
| Central Processing Unit | Graphics Processing Unit |
| Several cores | Many cores |
| Low latency | High throughput |
| Good for serial processing | Good for parallel processing |
| Can do a handful of operations at once | Can do thousands of operations at once |

Tensor Processing Unit (TPU) #

With the boom in AI and machine/deep learning there are now even more specialised processing cores called Tensor cores. These are faster and more efficient when performing tensor/matrix calculations. Exactly what you need for the type of mathematics involved in machine/deep learning.

Although there are dedicated TPUs (such as Google's purpose-built accelerator chips), some of the latest Nvidia GPUs also include a number of Tensor cores, as you will see later in this article.

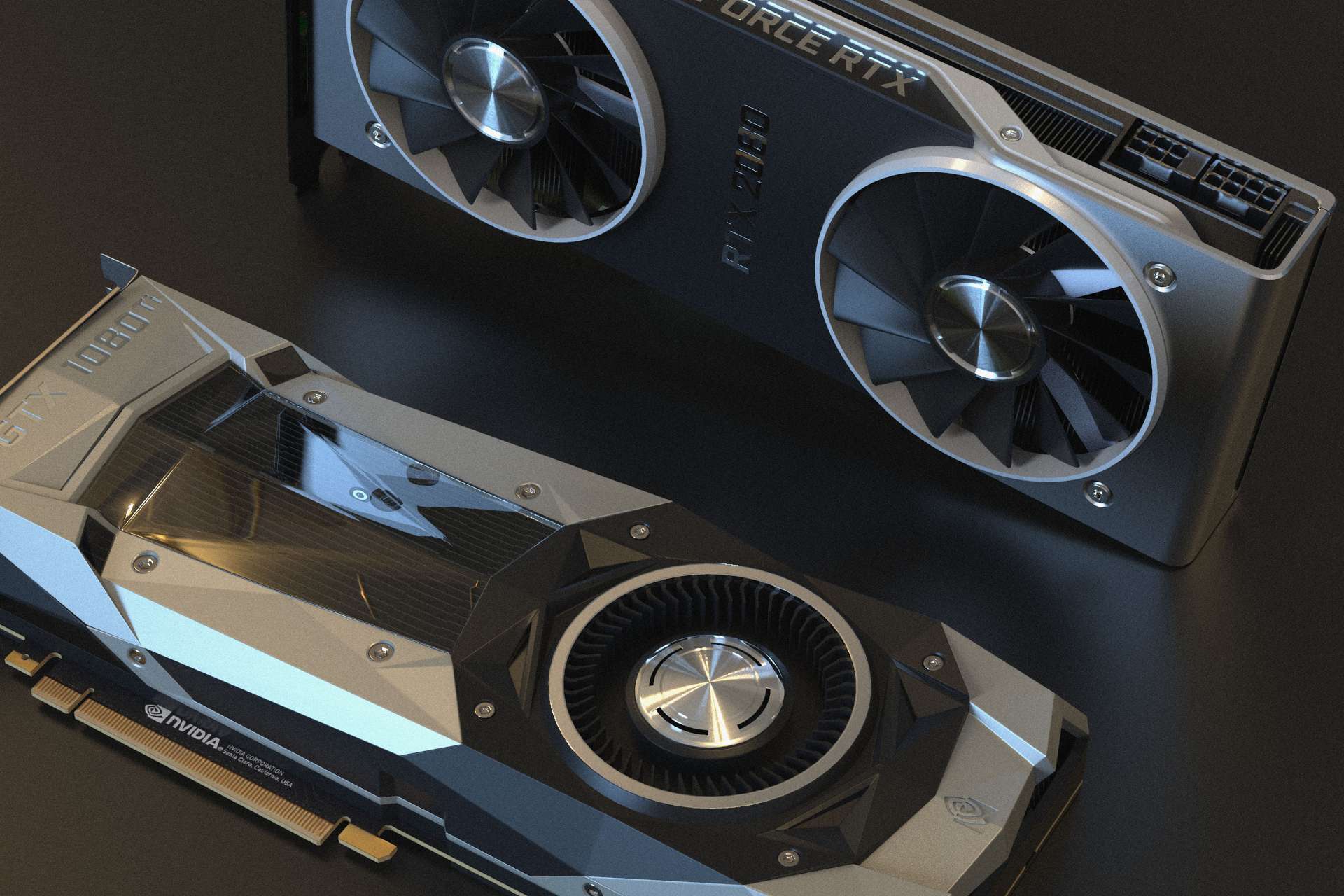

Nvidia vs AMD #

This is going to be quite a short section, as the answer to this question is definitely Nvidia.

You can use AMD GPUs for machine/deep learning, but at the time of writing Nvidia's GPUs have much higher compatibility, and are just generally better integrated into tools like TensorFlow and PyTorch.

I know from my own experience that trying to use an AMD GPU with TensorFlow requires using additional tools (ROCm), which tend to be a bit fiddly, and sometimes leave you with a not quite up to date version of TensorFlow/PyTorch, just so you can get the card working.

This situation may improve in the future, but if you want a hassle free experience, it is better to stick with Nvidia for now.

GPU Features #

Picking out a GPU that will fit your budget, and is also capable of completing the machine learning tasks you want, basically comes down to a balance of four main factors:

- How much RAM does the GPU have?

- How many CUDA and/or Tensor cores does the GPU have?

- What chip architecture does the card use?

- What are your power draw requirements (if any)?

The following subsections look into these areas, and will hopefully give you a better grasp of what matters to you.

GPU RAM #

The answer to this is the more the better! Very helpful I know...

It really comes down to what you are modelling, and how big those models are. For example if you are dealing with images, videos or audio, then by definition you are going to be dealing with quite a large amount of data, and GPU RAM will be an extremely important consideration.

There are always ways to get around running out of memory (e.g. reducing the batch size). However, you want to limit the amount of time you have to spend messing about with code just to get around memory requirements, so a good balance for your requirements is essential.
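As a rough illustration of the batch size workaround, the sketch below estimates how much memory one batch of images needs and halves the batch size until it fits a given budget. The function names, the 224x224 RGB image size and the 100 MiB budget are assumptions chosen for the example. It only counts the input batch itself; a real model also needs room for weights, activations and gradients.

```python
def batch_bytes(batch_size, channels, height, width, bytes_per_value=4):
    """Bytes needed to hold one batch of images (32-bit floats by default)."""
    return batch_size * channels * height * width * bytes_per_value


def largest_batch_that_fits(budget_bytes, channels, height, width, start=256):
    """Halve the batch size until one batch fits inside the budget.

    Stops at a batch size of 1 even if that still does not fit.
    """
    batch_size = start
    while batch_size > 1 and batch_bytes(batch_size, channels, height, width) > budget_bytes:
        batch_size //= 2
    return batch_size


# 64 RGB images of 224x224 pixels stored as 32-bit floats.
print(batch_bytes(64, 3, 224, 224) / 2**20)  # 36.75 (MiB)

# Hypothetical budget of 100 MiB for the input batch alone.
print(largest_batch_that_fits(100 * 2**20, 3, 224, 224))  # 128
```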

Image by OpenClipart-Vectors from Pixabay

As a general rule of thumb I would suggest the following:

4GB - The absolute minimum I would consider. This will work well in most cases as long as you are not dealing with overly complicated models, or large amounts of images, videos or audio. Great if you are just starting out and want to experiment without breaking the bank. The improvements over a CPU will still be night and day.

8GB - I would say this is a good middle ground. You can get most tasks done without hitting RAM limits, but you will have problems with more complicated models with images, video or audio.

12GB - This I would describe as optimal without being ridiculous. You can deal with most larger models, even those that deal with images, video or audio.

12GB+ - The more the better, you will be able to handle larger datasets and bigger batch sizes. However, beyond 12GB is where the prices really start to ramp up.

In my experience I would say it is better, on average, to opt for a card that is 'slower' with more RAM, if the cost is the same. Remember, the advantage of a GPU is high throughput, and this is heavily dependent on available RAM to feed the data through the GPU.

CUDA Cores and Tensor Cores #

This is fairly simple really. The more CUDA (Compute Unified Device Architecture) cores / Tensor cores the better.

The other items, such as RAM and chip architecture (see the next section), should probably be considered first. Once you have narrowed down your selection, look at the cards with the highest number of CUDA/Tensor cores.

For machine/deep learning Tensor cores are better (faster and more efficient) than CUDA cores. This is due to them being designed precisely for the calculations that are required in the machine/deep learning domain.

The reality is that it doesn't matter a great deal, as CUDA cores are plenty fast enough. If you can get a card which includes Tensor cores too, that is a good plus point to have, just don't get too hung up on it.
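The selection order described above (RAM and architecture first, core count second) can be sketched in a few lines of Python. The card figures are taken from the medium budget table later in this article; the `shortlist` function and the thresholds passed to it are made up for illustration.

```python
# Figures from the medium budget table in this article.
cards = [
    {"name": "RTX 2060 12GB", "ram_gb": 12, "compute_capability": (7, 5), "cuda_cores": 2176},
    {"name": "RTX A2000 6GB", "ram_gb": 6, "compute_capability": (8, 6), "cuda_cores": 3328},
    {"name": "RTX 3060 12GB", "ram_gb": 12, "compute_capability": (8, 6), "cuda_cores": 3584},
]


def shortlist(cards, min_ram_gb, min_compute_capability):
    """Filter on RAM and compute capability first, then rank by CUDA cores."""
    eligible = [
        card for card in cards
        if card["ram_gb"] >= min_ram_gb
        and card["compute_capability"] >= min_compute_capability
    ]
    return sorted(eligible, key=lambda card: card["cuda_cores"], reverse=True)


# Hypothetical requirements: at least 12GB of RAM and compute capability 7.0.
for card in shortlist(cards, min_ram_gb=12, min_compute_capability=(7, 0)):
    print(card["name"], card["cuda_cores"])
# RTX 3060 12GB 3584
# RTX 2060 12GB 2176
```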

Moving forward you will see "CUDA" mentioned a lot, and it can get confusing, so to summarise:

- CUDA cores - these are the physical processors on the graphics cards, typically in their thousands.

- CUDA 11 - the number may change, but this refers to the version of the CUDA software (toolkit/drivers) that is installed to allow the graphics card to be used for computation. New releases are made regularly, and it can be installed like any other software.

- CUDA generation (or compute capability) - this describes the capability of the graphics card in terms of its generational features. This is fixed in hardware, and so can only be changed by upgrading to a new card. It is distinguished by numbers and a code name. Examples: 3.x [Kepler], 5.x [Maxwell], 6.x [Pascal], 7.0 [Volta], 7.5 [Turing] and 8.x [Ampere].
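If you already have a card, you can look up its compute capability from Python. The sketch below assumes PyTorch may or may not be installed: `torch.cuda.get_device_capability` returns a `(major, minor)` tuple, and the `architecture_name` helper is a hypothetical function that maps it to a code name. Note that 7.0 is Volta while 7.5 is Turing, and anything not covered by the examples above is simply reported as unknown.

```python
def architecture_name(major, minor):
    """Map a compute capability to a code name (illustrative helper)."""
    if major == 7:
        # 7.0 and 7.2 are Volta, 7.5 is Turing.
        return "Volta" if minor < 5 else "Turing"
    if major == 8:
        # 8.0 to 8.7 are Ampere; newer values are outside this article's list.
        return "Ampere" if minor <= 7 else "unknown"
    names = {3: "Kepler", 5: "Maxwell", 6: "Pascal"}
    return names.get(major, "unknown")


print(architecture_name(3, 7))  # Kepler
print(architecture_name(7, 5))  # Turing

# Optional: query the installed card, if PyTorch and a CUDA GPU are present.
try:
    import torch
except ImportError:
    torch = None

if torch is not None and torch.cuda.is_available():
    major, minor = torch.cuda.get_device_capability(0)
    print(f"{major}.{minor}", architecture_name(major, minor))
```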

Chip architecture #

This is actually more important than you might think. As I mentioned earlier we are basically discarding AMD at this point, so in terms of generations of chip architecture we only have Nvidia.

The main thing to look out for is the "Compute Capability" of the chipset, sometimes called "CUDA generation". This is fixed for each card, so once you buy the card you are stuck with whatever compute capability the card has.

It is important to know what the compute capability of the card is for two main reasons:

- significant feature improvements

- deprecation

Significant feature improvement #

Let's start with a significant feature improvement. Mixed precision training:

> There are numerous benefits to using numerical formats with lower precision than 32-bit floating point. First, they require less memory, enabling the training and deployment of larger neural networks. Second, they require less memory bandwidth which speeds up data transfer operations. Third, math operations run much faster in reduced precision, especially on GPUs with Tensor Core support for that precision. Mixed precision training achieves all these benefits while ensuring that no task-specific accuracy is lost compared to full precision training. It does so by identifying the steps that require full precision and using 32-bit floating point for only those steps while using 16-bit floating point everywhere else.

In practice, mixed precision training only pays off if you have a GPU with compute capability 7.x (Volta or Turing) or higher. That is basically the RTX 20 series or newer, or the RTX, "T" or "A" series on workstation/server.

The main reason mixed precision training is such an advantage when considering a new graphics card is that it lowers RAM usage, so by having a slightly newer card your RAM requirements are reduced.
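To put a rough number on the RAM saving, the sketch below compares the size of one tensor stored as 32-bit and as 16-bit floats. The tensor shape is an arbitrary example of a batch of activations, and `tensor_bytes` is a hypothetical helper. Real savings are smaller than a clean halving, because mixed precision keeps some values (the steps that need full precision) in 32-bit.

```python
import struct
from math import prod

# Bytes per value: "f" is a 32-bit float, "e" is a 16-bit float.
BYTES_PER_VALUE = {
    "float32": struct.calcsize("<f"),
    "float16": struct.calcsize("<e"),
}


def tensor_bytes(shape, dtype):
    """Memory needed to store a tensor of the given shape and dtype."""
    return prod(shape) * BYTES_PER_VALUE[dtype]


# Example: activations for a batch of 64, with 256 channels of 56x56 values.
shape = (64, 256, 56, 56)
fp32 = tensor_bytes(shape, "float32")
fp16 = tensor_bytes(shape, "float16")
print(fp32 / 2**20, fp16 / 2**20)  # 196.0 98.0 (MiB)
```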

Deprecation #

Then we move to the other end of the scale.

If you have particularly high RAM requirements, but not enough money for a high-end card, then you may be tempted to opt for an older model of GPU on the second-hand market.

A prime example of this is the Tesla K80, which has 4992 CUDA cores and 24GB of RAM. It originally retailed at about USD 7000.00 back in 2014. I just had a look on eBay in the UK, and it is going for anything from GBP 130.00 (USD/EUR 150) to GBP 170.00 (USD/EUR 195)! That is a lot of RAM for such a small price.

However, there is quite a large downside. The K80 has a compute capability of 3.7 (Kepler), which is deprecated from CUDA 11 onwards (CUDA 11 being the current version at the time of writing). That means the card is effectively end of life, and won't be supported by future CUDA releases. A great pity really, but something to bear in mind, as it is very tempting.

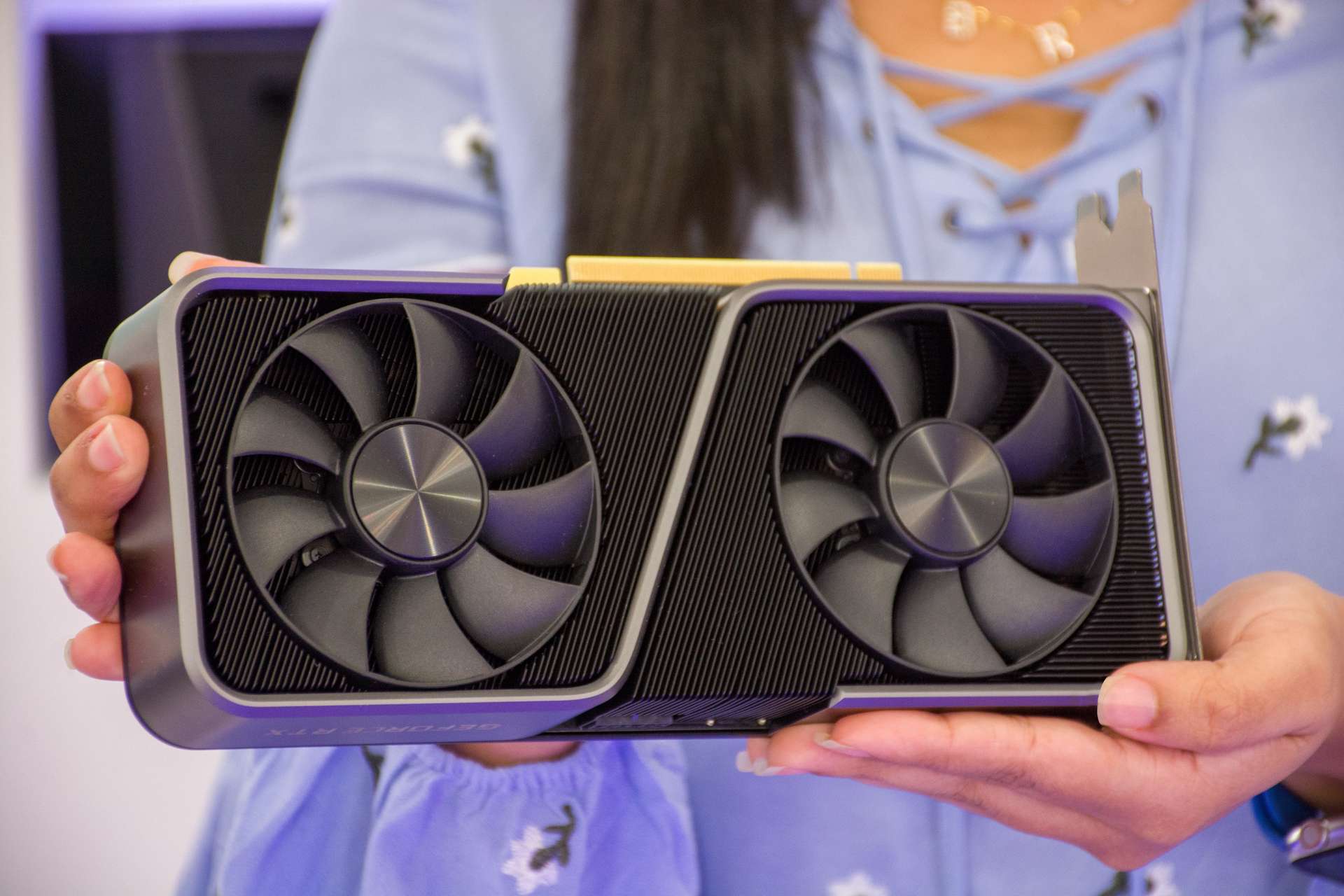

Graphics Cards vs Workstation/Server Cards #

Nvidia basically splits its cards into two sections. There are the consumer graphics cards, and then cards aimed at workstations/servers (i.e. professional cards).

There are obviously differences between the two sections, but the main thing to bear in mind is that the consumer graphics cards will generally be cheaper for the same specs (RAM, CUDA cores, architecture). However, the professional cards will generally have better build quality, and lower energy consumption.

Photo by Elias Gamez on Pexels

Looking at the higher end (and very expensive) professional cards you will also notice that they have a lot of RAM (the RTX A6000 has 48GB for example, and the A100 has 80GB!). This is because they are typically aimed directly at the professional 3D modelling, rendering, and machine/deep learning markets, which require high levels of RAM. If you have those sorts of requirements, you will likely not need advice on what to purchase!

In summary, you will likely be best sticking to the consumer graphics market, as you will get a better deal.

Recommendation #

Finally, I thought I would actually make some recommendations based on budget and requirements. I have split this into three sections:

- Low budget

- Medium budget

- High budget

Please bear in mind the high budget does not consider anything beyond top end consumer graphics cards. If you really have a very high budget you should be looking at the professional card series, such as the Nvidia A series of cards which can run costs up into many thousands.

I have included one card that is only available on the second hand market in the low budget section. This is mainly because I think in the low budget section it is worth considering second hand cards.

I have also included professional desktop series cards in the mix as well (T600, A2000 and A4000). You will notice that some of the specs are a little worse than comparable consumer graphics cards, but power draw is significantly better, which may be of concern for some people.

Low Budget (Less than GBP 220 - EUR/USD 250) #

| GPU | RAM | Compute Capability | CUDA Cores | Tensor Cores | Mixed Precision | Power Consumption |
|---|---|---|---|---|---|---|
| GTX 1070 / 1070ti / 1080 | 8GB | 6.1 (Pascal) | 1920 / 2432 / 2560 | 0 | No | 150W / 180W / 180W |
| GTX 1660 | 6GB | 7.5 (Turing) | 1408 | 0 | Yes | 120W |
| T600 | 4GB | 7.5 (Turing) | 640 | 0 | Yes | 40W |

Medium Budget (Less than GBP 440 - EUR/USD 500) #

| GPU | RAM | Compute Capability | CUDA Cores | Tensor Cores | Mixed Precision | Power Consumption |
|---|---|---|---|---|---|---|
| RTX 2060 12GB | 12GB | 7.5 (Turing) | 2176 | 272 (Gen 2) | Yes | 184W |
| RTX A2000 6GB | 6GB | 8.6 (Ampere) | 3328 | 104 (Gen 3) | Yes | 70W |
| RTX 3060 12GB | 12GB | 8.6 (Ampere) | 3584 | 112 (Gen 3) | Yes | 170W |

High Budget (Less than GBP 1050 - EUR/USD 1200) #

| GPU | RAM | Compute Capability | CUDA Cores | Tensor Cores | Mixed Precision | Power Consumption |
|---|---|---|---|---|---|---|
| RTX 3080 Ti | 12GB | 8.6 (Ampere) | 10240 | 320 (Gen 3) | Yes | 350W |
| A4000 16GB | 16GB | 8.6 (Ampere) | 6144 | 192 (Gen 3) | Yes | 140W |
| RTX 3090 | 24GB | 8.6 (Ampere) | 10496 | 328 (Gen 3) | Yes | 350W |

Other Options #

If you have decided the outlay and bother of getting a graphics card is not for you, you can always take advantage of Google Colab, which gives you access to a GPU for free. Just bear in mind there are time limitations to this, and the GPU is automatically assigned, so having your own graphics card is usually worth it in the long run.

At the time of writing the following GPUs are available through Colab:

| GPU | RAM | Compute Capability | CUDA Cores | Tensor Cores |
|---|---|---|---|---|
| Tesla K80 | 12GB | 3.7 | 2496 | 0 |
| Tesla P100 | 16GB | 6.0 | 3584 | 0 |
| Tesla T4 | 16GB | 7.5 | 2560 | 320 |

Note: I mentioned earlier in the article that the K80 has 24GB of RAM and 4992 CUDA cores, and it does. However, the K80 is an unusual beast in that it is basically two GPUs bolted together on one board. This means that when you use a K80 in Colab, you are actually given access to half the card, so only 12GB and 2496 CUDA cores.
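Because the GPU is assigned automatically, it is worth checking which one you have been given. The sketch below is one possible way to do that from Python by calling Nvidia's `nvidia-smi` command line tool; the `list_gpus` function name is made up for illustration, and it returns `None` when the tool is not installed or fails, for example on a machine without an Nvidia GPU.

```python
import shutil
import subprocess


def list_gpus():
    """Return one 'name, total memory' string per GPU, or None if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None  # no Nvidia driver tools on this machine
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None  # tool present but no usable GPU
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_gpus()
if gpus is None:
    print("No Nvidia GPU detected")
else:
    for gpu in gpus:
        print(gpu)
```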

Conclusion #

There is a lot of choice out there, and it can be very confusing. Hopefully you have come away from this article with a much better idea of what will fit your particular requirements.

Is there a particular graphics card that you think deserves a special mention? Let me know in the comments.
